[See the UPDATE section at the end of this post for details on the relationship between Sngular and MeaningCloud as of June 1st, 2017.]

I am thrilled to announce that Daedalus, the company that I founded in 1998 in Spain, is now part of the Sngular group. This operation is part of a merger of five complementary IT companies to form a corporation built on talent and innovation, with the purpose of better serving our customers in times of accelerating change.

As a consequence of this M&A operation, Daedalus has been renamed Sngular Meaning and has raised Series C funding from Sngular, its parent company, to accelerate its international development.

What does this deal mean for MeaningCloud?

MeaningCloud LLC is the United States branch of Sngular Meaning, in charge of developing and marketing our text analytics services. It is our strategic bid to establish ourselves as an international reference in the field of semantic technologies.

For MeaningCloud, this deal ensures:

- The financial resources for a faster expansion of our international business.

- New marketing channels through cooperation with our Sngular sibling companies.

- New opportunities to build specific solutions for vertical markets.

Some figures about MeaningCloud (which will quickly become outdated):

- 5,000 registered users.

- 1,000 active users in the last month.

- 5 million API calls per day.

What is the structure of Sngular?

The companies that form the Sngular group are:

Altogether, we are 300 people, with branches in the United States, Mexico and Spain. We define ourselves as a talent tech team. We will be visible under the domain sngular.team. Our CEO is Jose Luis Vallejo.

Regarding Sngular Meaning, apart from Jose Luis Vallejo joining the Board of Directors, there are no other changes at the management level. For my part, I will continue as President of Sngular Meaning and CEO of MeaningCloud. We guarantee the continuity of our strategy around our trademarks MeaningCloud and Stilus.

When is the kick off?

On October 8th we will give a public presentation of the new Sngular group. This will be an event for employees and customers (by invitation only), but we are planning other open events for later on.

This is an exciting moment for us. We look to the future with confidence. I am sure that, as members of the Sngular family, we will continue to enjoy the affection and support of all of you: customers, business partners and friends. Wish us luck, and thank you for remaining by our side!

UPDATE as of June 1st, 2017

Almost two years later, anybody can see that the Sngular merger was a great success. What was founded as an umbrella corporation of five sister companies is now a strong IT company with a multinational presence, into which four of the original founding companies have been fully merged. In addition, other companies have joined Sngular through various mechanisms in recent months.

Regarding Sngular Meaning, we have jointly decided to integrate its service-oriented Data Science and Big Data activities into Sngular, while retaining all activities and assets in the area of Text Analytics and Natural Language Processing in general. The Spain-based company Sngular Meaning (formerly Daedalus) has been renamed MeaningCloud Europe SL and owns 100% of the US-based company MeaningCloud LLC. Sngular maintains a non-controlling interest in our renewed and renamed company.

Long live Sngular! Long live MeaningCloud!

Jose C. Gonzalez

I am thrilled to announce that Daedalus, the company that I founded in 1998 in Spain, is now part of the

I am thrilled to announce that Daedalus, the company that I founded in 1998 in Spain, is now part of the  MeaningCloud LLC

MeaningCloud LLC

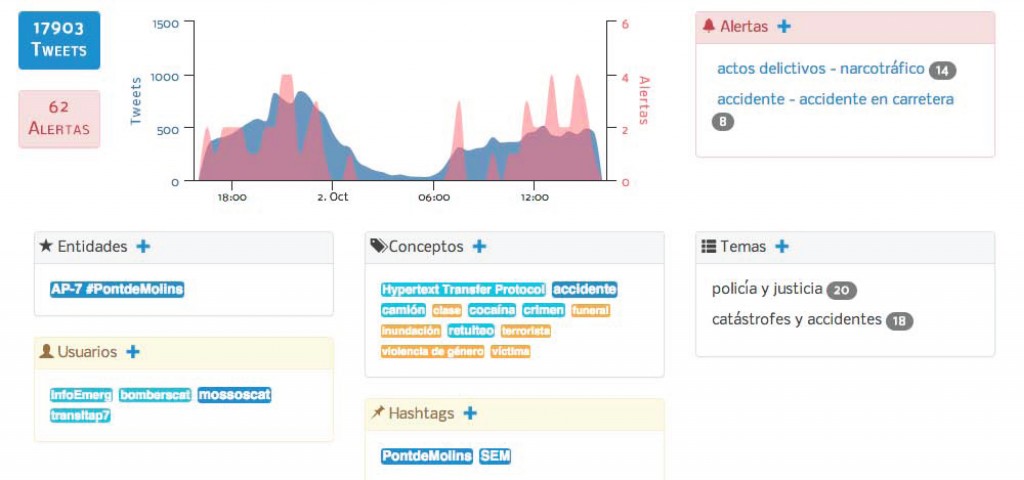

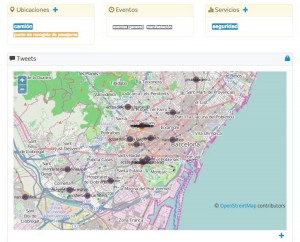

A multidimensional social perspective

A multidimensional social perspective