Build/Test a model

Once you have created a model and defined its categories with their training texts, term lists, or both, you can build the model to update the binary files used by the classification system.

You can do this with the Build model action, by clicking the Build button in the sidebar of any of the model views. Building ensures that your classification tasks use the most recent version of the model, and that all the rules, stopwords and other settings are working properly.

In general, the model is built automatically when you make changes to it, but in some cases it is useful to force a build. For instance, if the status of the model is "outdated", you will need to build it to regenerate the compiled version of the model from the latest saved version.

After that, you can evaluate how well it works and refine the results if necessary.

MeaningCloud provides a testing tool for this purpose. You can access it by clicking the Test button in the models dashboard, in the sidebar of the different model views, or on the build page. This opens the Text Classification API Test Console with your license key and your model already selected.

Did you notice...?

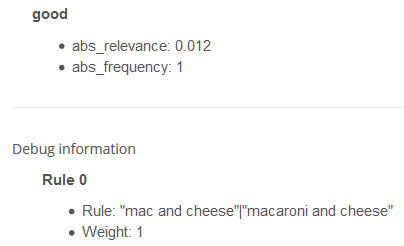

When you click the Test button on a model, the verbose and debug options are automatically selected in the Test Console. As a result, the output includes a term_list with the words used to classify the text into each category, as well as any rules that were triggered during the classification.

This is very helpful when tuning your models.
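If you want to reproduce the Test Console request from your own code, the sketch below builds an equivalent POST request with verbose and debug enabled. It is only an illustration under stated assumptions: the endpoint URL (`class-2.0`), the parameter names `key`, `txt`, `model`, `verbose` and `debug`, and the `"y"` flag value are assumptions, as are the helper names `build_test_request` and `classify`. Check the Text Classification API reference before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# ASSUMPTION: endpoint URL; confirm it in the Text Classification API reference.
API_URL = "https://api.meaningcloud.com/class-2.0"


def build_test_request(license_key: str, model: str, text: str) -> Request:
    """Build a POST request similar to what the Test Console sends.

    ASSUMPTION: the parameter names and the "y" flag value below are
    illustrative and should be checked against the API documentation.
    """
    params = {
        "key": license_key,
        "model": model,
        "txt": text,
        "verbose": "y",  # request the term_list for each category
        "debug": "y",    # request the rules that were triggered
    }
    body = urlencode(params).encode("utf-8")
    return Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def classify(license_key: str, model: str, text: str) -> dict:
    """Send the request and return the decoded JSON response."""
    with urlopen(build_test_request(license_key, model, text)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to inspect exactly which parameters go out before spending API credits.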

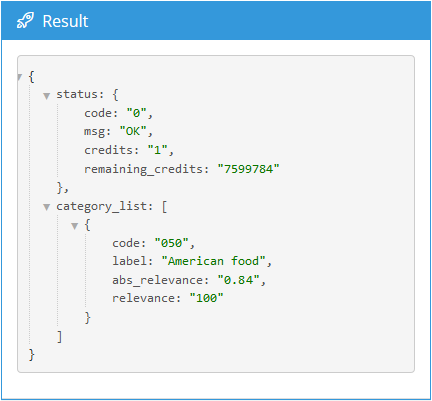

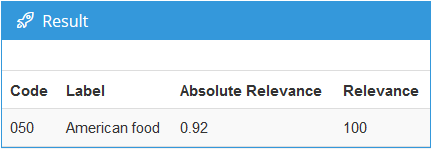

The results are shown in two formats: raw, exactly as the API returns them in JSON (image on the left), and formatted, in a more visual layout (image on the right).

In the list of categories, the ones that apply to the text are shown with these fields:

- Code: category identifier.

- Label: category label.

- Relevance: relative relevance assigned to the category.

- Abs relevance: absolute relevance obtained in the classification.

- Term list: terms in the text that were used to classify it in the category, either because they are terms defined in the rules or because they appear in the training texts. These terms appear only when the verbose parameter is enabled.

- Debug: rules that were triggered to assign the category to the text. These rules appear only when the debug parameter is enabled (and rules are defined).
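When working with the raw JSON output, a small helper can print these fields per category. The sketch below assumes the response contains a `category_list` whose items carry `code`, `label`, `relevance`, `abs_relevance` and, when verbose is enabled, a `term_list` whose items have a `form` field. These key names are assumptions to compare against the raw output in the Test Console; `summarize_categories` is a hypothetical helper, and the sample response is invented for illustration.

```python
from typing import Any


def summarize_categories(response: dict[str, Any]) -> list[str]:
    """Return one readable line per category in a classification response.

    ASSUMPTION: key names follow the fields described in this section;
    verify them against the raw JSON returned by the API.
    """
    lines = []
    for cat in response.get("category_list", []):
        # term_list is only expected when the verbose parameter is enabled
        terms = [t.get("form", "") for t in cat.get("term_list", [])]
        lines.append(
            f'{cat.get("code")} ({cat.get("label")}): '
            f'relevance={cat.get("relevance")}, '
            f'abs_relevance={cat.get("abs_relevance")}, '
            f"terms={terms}"
        )
    return lines


if __name__ == "__main__":
    # Invented sample response, for illustration only.
    sample = {
        "category_list": [
            {
                "code": "SPORTS",
                "label": "Sports",
                "relevance": "100",
                "abs_relevance": "0.8",
                "term_list": [{"form": "match"}, {"form": "goal"}],
            }
        ]
    }
    for line in summarize_categories(sample):
        print(line)
```

Using `.get()` with defaults lets the same helper handle responses where verbose was disabled and no `term_list` is present.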

The most useful fields are the term list and the debug output with the triggered rules, as together they show why a text was classified the way it was.

Important

If the model has rules in its term lists, the form field will show terms from all the lists. In other words, terms that decrease the relevance of a category will also be shown.

Recommendation

For more detail on model testing and tuning, refer to the fine tuning section.