Test Parser

As we have seen in the previous section, there are many operators and aspects that we can use and combine when defining a rule. Many of these aspects depend on the morphological analysis carried out by our engines.

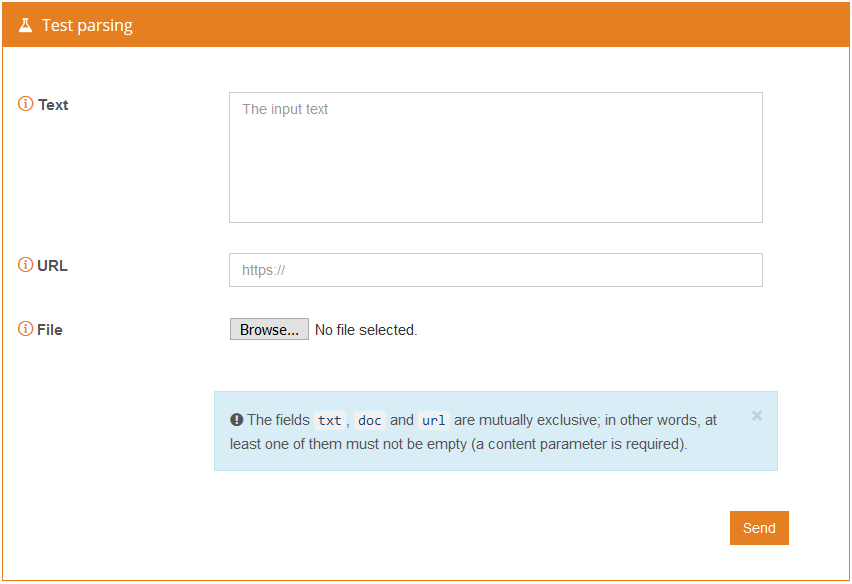

In order to enable you to write rules effectively we provide the test parser console, accessible from the corresponding button on the sidebar on the left:

The console provides key information for defining rules: tokenization, and morphological and semantic analysis.

Tokenization is very important because it shows which multiwords the parser groups into a single token, and therefore how the distance between tokens is counted when you use distance operators. By adding further grammatical features to your rules, such as semantic information (sementity, semtheme and/or semgeo), form (F@) and lemma (L@), you can be increasingly sure that the rules you define apply to the cases you have in mind.
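As a rough illustration of why tokenization matters for distance operators, the Python sketch below counts the tokens lying between two words on two different token lists. The parser-style tokenization (with "was hacked" and "United States" grouped into single tokens) and the "tokens in between" distance metric are assumptions made for illustration; always check the actual tokenization in the test parser output.

```python
# Hypothetical tokenization, as the test parser might show it: a multiword
# ("United States") and an auxiliary + main verb ("was hacked") are single tokens.
parser_tokens = ["My", "home", "computer", "was hacked", "from", "the", "United States"]

# Naive whitespace split of the same sentence, for comparison.
naive_tokens = "My home computer was hacked from the United States".split()


def tokens_between(tokens, first, second):
    """Count the tokens strictly between two tokens (assumed distance metric)."""
    i, j = tokens.index(first), tokens.index(second)
    return abs(j - i) - 1


print(tokens_between(parser_tokens, "computer", "from"))  # 1
print(tokens_between(naive_tokens, "computer", "from"))   # 2
```

The same pair of words is one token apart in the grouped tokenization but two apart in the naive split, which is why a distance that looks right when counting words by eye may not be the one the engine applies.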

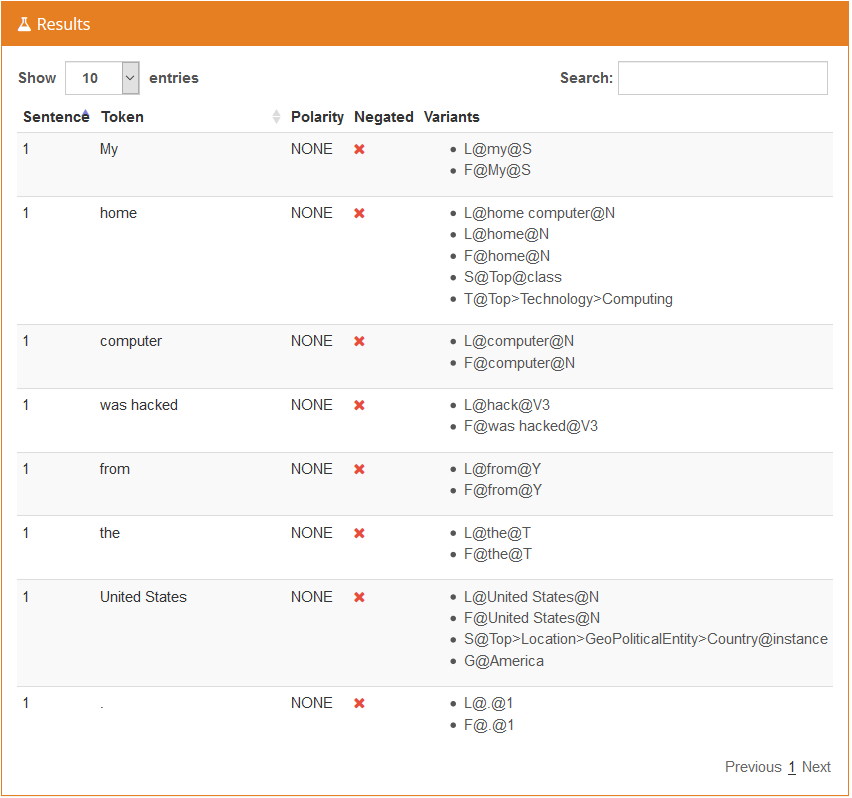

The following image shows the result obtained for the sentence "My home computer was hacked from the United States.".

The output is displayed in a table consisting of one row per token and five columns per row:

- Sentence: The number of the sentence the token belongs to, starting from 1. This is useful for checking how the text has been split into sentences.

- Token: The lexical form of the token.

- Polarity: The polarity value assigned to the token (only relevant when the model is configured to detect polarity).

- Negated: Shows whether the token is affected by a negation in the text.

- Variants: The different variants available for the token.

Note that the variants shown are the most verbose ones, and therefore also the most restrictive. For instance, as we mentioned before, if a term can be a lemma and the form operator (F@) has not been specified, it is treated as a lemma by default. This means that if a variant shows "L@hack@V3", a rule containing just the term "hack" will match it: the term is considered a lemma by default, and no part-of-speech filter is applied (all parts of speech are accepted, including @V3).
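The matching logic described above can be sketched in a few lines of Python. This is a simplified model, not the engine's implementation: it assumes variants follow the layout `<kind>@<value>[@<pos>]`, where the kind is `L` (lemma) or `F` (form), and the variant list for "hacked" (including the `F@hacked` form variant) is hypothetical.

```python
def parse_variant(variant):
    """Split a variant such as "L@hack@V3" into (kind, value, pos).

    Assumed layout: <kind>@<value>[@<pos>]; pos is None when absent.
    """
    parts = variant.split("@")
    kind, value = parts[0], parts[1]
    pos = parts[2] if len(parts) > 2 else None
    return kind, value, pos


def rule_term_matches(term, variant):
    """Return True if a rule term matches one of a token's variants."""
    if "@" not in term:
        term = "L@" + term  # bare term: treated as a lemma, any part of speech
    t_kind, t_value, t_pos = parse_variant(term)
    kind, value, pos = parse_variant(variant)
    # The part of speech only filters when the rule term specifies one.
    return t_kind == kind and t_value == value and (t_pos is None or t_pos == pos)


token_variants = ["F@hacked", "L@hack@V3"]  # hypothetical variants for "hacked"
print(any(rule_term_matches("hack", v) for v in token_variants))  # True
print(rule_term_matches("F@hack", "L@hack@V3"))                    # False
```

The bare term "hack" matches because it is expanded to a lemma with no part-of-speech restriction, while "F@hack" fails because it explicitly asks for a form.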

Semantic information is also shown in the test parser results. Again, the version shown is the most restrictive one. This especially affects the ontology type, to which a suffix is appended indicating whether the analysis is associated with an entity (suffix @instance) or a concept (suffix @class).
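By analogy with the lemma example, a rule that omits the @instance/@class suffix should be less restrictive than the value shown. The Python sketch below models that assumption; the ontology type string is a hypothetical value, and the suffix-matching behavior is inferred from the description above rather than taken from the engine.

```python
SUFFIXES = ("@instance", "@class")


def split_type(ontology_type):
    """Split "Type@instance" or "Type@class" into (type, suffix or None)."""
    for suffix in SUFFIXES:
        if ontology_type.endswith(suffix):
            return ontology_type[: -len(suffix)], suffix
    return ontology_type, None


def type_matches(rule_type, parsed_type):
    """Assumption: a rule type without a suffix matches both entities and concepts."""
    r_type, r_suffix = split_type(rule_type)
    p_type, p_suffix = split_type(parsed_type)
    return r_type == p_type and (r_suffix is None or r_suffix == p_suffix)


shown = "Top>Location>GeoPoliticalEntity>Country@instance"  # hypothetical value
print(type_matches("Top>Location>GeoPoliticalEntity>Country", shown))        # True
print(type_matches("Top>Location>GeoPoliticalEntity>Country@class", shown))  # False
```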

Did you notice...?

Auxiliary verbs are grouped with the verb they refer to, so the order of the tokens in the output may sometimes differ from the order in the text. In the example used, "was" is analyzed together with "hacked", so if the word "never" appeared in the text ("My home computer was never hacked from the United States"), it would appear right before "was hacked".
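The reordering effect can be reproduced with a toy Python sketch that holds an auxiliary back until its main verb appears and then emits the pair at the main verb's position. The fixed word sets are hypothetical and only cover this one sentence (the real engine relies on full morphological analysis), and multiwords such as "United States" are deliberately left ungrouped here.

```python
# Hypothetical word lists for this single example sentence.
AUXILIARIES = {"was"}
MAIN_VERBS = {"hacked"}


def group_auxiliaries(words):
    """Merge auxiliaries with their main verb, emitting the pair where the verb occurs."""
    output, pending = [], []
    for word in words:
        if word in AUXILIARIES:
            pending.append(word)  # hold the auxiliary until its verb appears
        elif word in MAIN_VERBS and pending:
            output.append(" ".join(pending + [word]))
            pending = []
        else:
            output.append(word)
    return output + pending  # flush any auxiliary left without a main verb


words = "My home computer was never hacked from the United States".split()
print(group_auxiliaries(words))
# ['My', 'home', 'computer', 'never', 'was hacked', 'from', 'the', 'United', 'States']
```

Because "was" is held back, "never" is emitted first, matching the order described above.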