I’m thrilled to announce that MeaningCloud Europe (formerly Daedalus), the company I started in 1998, is now a part of Reddit. You can read the full announcement of this acquisition on Reddit’s blog.

You all know Reddit, of course, the online platform hosting more than 100,000 communities where people can discuss and chat about their interests, hobbies, and passions.

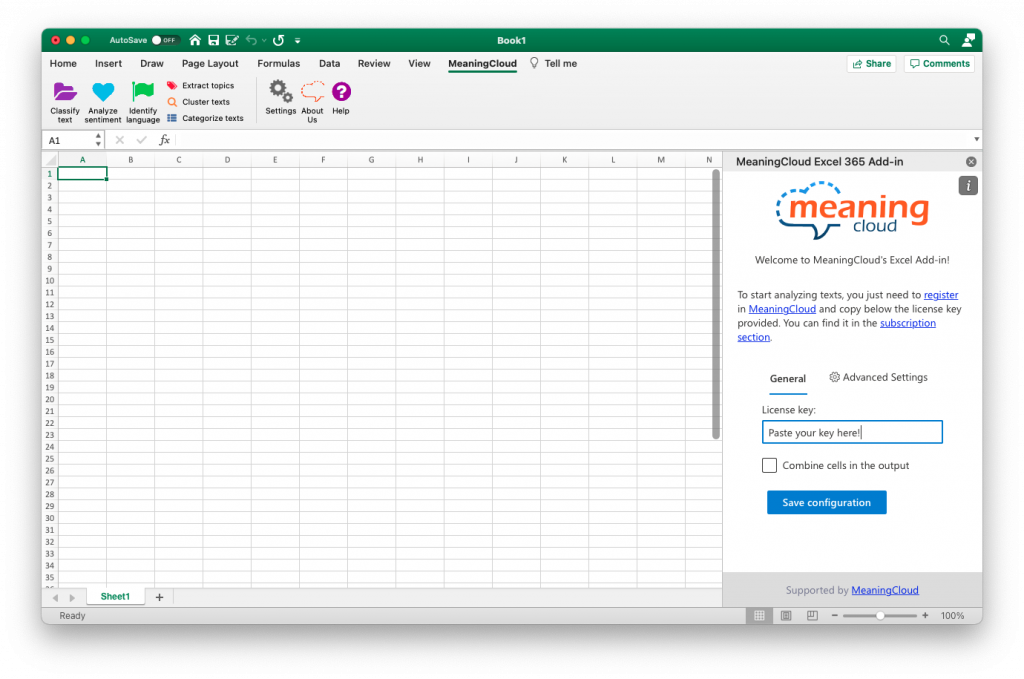

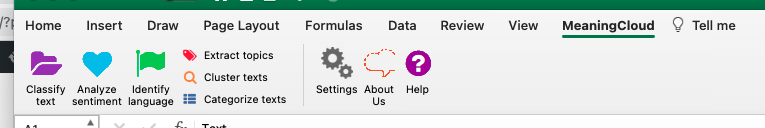

And you know MeaningCloud, the Language Technology company that competes (modestly but proudly) with IBM Watson, Google Cloud, Amazon Comprehend, and Microsoft Cognitive Services. Some of you may remember that we started offering public text analytics SaaS services under the name Textalytics in 2013, more or less at the same time as the commercial launch of the IBM Watson APIs.

For geeks like us, working on Content Understanding as part of Reddit’s fantastic machine learning (ML) team, on the largest internet corpus of conversational content, is a dream come true. Needless to say, our team is thrilled about the new challenges we will face at Reddit.

At this point, some of you (users, clients, or friends who follow us) may be wondering what happens now to our current businesses. I’m happy to announce that, as part of the deal, we have transferred our clients to our shareholder Sngular. Sngular (Singular People, S.A.) is a global IT services provider and a Spanish public company with 1,300 employees. At MeaningCloud, we are proud to have been among the founding partners of Sngular in 2015. In particular, Sngular is taking over:

- Our wholly owned subsidiaries, MeaningCloud LLC and Konplik Health Inc. The former will continue running our text analytics platform and serving our clients and users worldwide (including 60,000 users of our free tier). Konplik will continue providing AI services to the health and pharma industries.

- Our stake in Expoune Inc., a services company built around applying MeaningCloud’s technology in different settings.

- Stilus, our automatic spelling, grammar, and style assistant for Spanish. With 250,000 registered users, it is the Spanish checker preferred by media companies and professionals (translators, copyeditors, journalists, and lovers of the Spanish language).

- Our clients in different industries (consulting, finance, infrastructure, telecommunications, etc.), for whom we develop tailored embedded solutions (SaaS or on-prem) on top of our technology.

So, we are leaving our business in the best hands, under the direction of Cesar Camargo (Sngular CEO), Alma Miller (CEO of Sngular in the United States), and José Luis Calvo (head of Artificial Intelligence). It’s your baby now!

You may have your favorite example of a company that pivoted: Netflix, IBM, Western Union, Android, Nintendo… They all made substantial changes to their core activities or strategy over the years. What about us? Competing in high tech is (extremely) tough. Starting an Artificial Intelligence company in Spain in 1998 was a sign of madness (one I shared with my colleagues Juan R. Velasco and Luis Magdalena). And bringing it this far (along with Antonio Laorden, José L. Martínez, and Julio Villena) was against all odds. Along the way, we pivoted our business several times. For example, in the last seven years, we took part in the birth of Sngular (following the vision of Jose Luis Vallejo), created two new US-based companies, and took a stake in Expoune (led by Robert Wescott).

On the technology side, however, we have followed a much more consistent path based on three principles: technological agnosticism, a problem-solving mindset, and client orientation. For example, Stilus was our first Natural Language Processing (NLP) product, and the newspaper El País became our first corporate client in the news industry in 2004. Eighteen years later, we still maintain Stilus for El País under the same license contract, despite all the changes in technology, product, people, and content platforms.

Today, we pivot again to become part of a large and fast-growing company in the social media arena. And we face this challenge with all the experience and assets accumulated over the years. Only time will judge our actions. However, I’m confident in the great value we will contribute to Reddit in the coming years, helping to build one of the leading organizations in applying NLP technologies, beyond the current hype around ML and Artificial Intelligence (AI) in general.

For me, all this means turning over a new leaf. With a Ph.D. in AI earned in the late ’80s, 30 years as a university professor, and 24 years since I started my first company… I meet all the requirements to be the most senior of the “intern snoos” at Reddit. I’m committed to doing my part, side by side with my incredible team and under the direction of Jack Hanlon and Rosa Català, to contribute in my modest way to Reddit’s growth and international expansion. Sounds like a plan, right?

Thank you, and good luck to all the people (past employees, investors, clients, users, and friends) who have supported us along the road. And let’s meet on Reddit!

All the best,

Jose C. Gonzalez

