Analyze the sentiment of social networks, reviews, or customer satisfaction surveys

Have you ever wondered what people say about you, your company or your products in social networks? Have you ever tried to analyze the tens of thousands of answers to open-ended questions in customer satisfaction surveys?

Sentiment Analysis (also known as Opinion Mining) applies natural language processing, text analytics, and computational linguistics to identify and extract subjective information from various types of content.

Advantages of Automating Sentiment Analysis

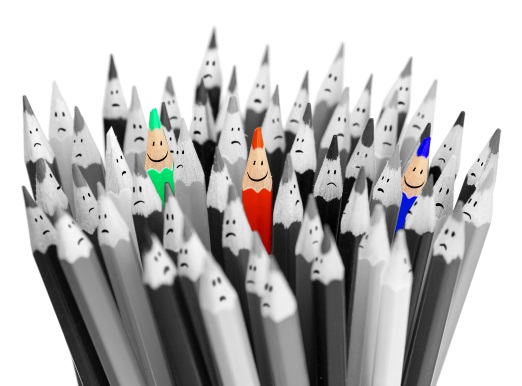

By automating sentiment analysis, you can process data that, due to its volume, variety, and velocity, cannot be handled efficiently by human resources alone. It is impossible to extract the full value from contact center interactions, social media conversations, and product reviews on forums and other websites (numbering in the thousands, if not hundreds of thousands) through manual work alone.

Voice of the Customer (or Citizen or Employee) analysis increasingly incorporates these sources of unstructured, unsolicited and instantaneous information. Moreover, because of their immediacy and spontaneity, these comments usually reveal the true emotions and opinions of those who are interacting.

Automatic sentiment analysis lets you process high volumes of data with minimal delay, high accuracy and consistency, and low cost, complementing human analysis in several scenarios:

Voice of the Customer (VoC) and Customer Experience management

Automatically analyze various sources of customer insight (such as surveys and public social media conversations) and interactions at customer contact points.

Social media analysis

Easily build tools for social media monitoring and analysis by extracting opinions on a massive scale in real time.

Analysis of the Voice of the Citizen, Employee, Voter, and more

Analyze all sorts of channels to measure satisfaction (with work, with public services, or with society in general) and to identify opinions, trends, and emergencies.

MeaningCloud’s Sentiment Analysis API

Sentiment Analysis at attribute level

Our Sentiment Analysis API performs a detailed, multilingual sentiment analysis on information from different sources.

The text provided is analyzed to determine whether it expresses a positive, neutral, or negative sentiment (or whether no sentiment can be detected at all). To do so, the individual phrases are identified and the relationships between them are evaluated, yielding a global polarity value for the text as a whole.
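As a rough illustration of how phrase-level polarities can roll up into one global value, the sketch below takes a weighted average of phrase scores. It is not MeaningCloud's method: the `Phrase` type, the [-1, 1] score range, the per-phrase `weight`, and the `neutral_band` threshold are all hypothetical, and the phrase scores are assumed to come from an earlier analysis step.

```python
from dataclasses import dataclass


@dataclass
class Phrase:
    text: str
    polarity: float      # hypothetical score in [-1.0, 1.0]; 0.0 = no opinion
    weight: float = 1.0  # hypothetical importance of the phrase within the text


def global_polarity(phrases, neutral_band=0.1):
    """Combine phrase-level scores into one (label, score) pair for the whole text."""
    # Phrases with zero polarity or zero weight carry no opinion.
    opinionated = [p for p in phrases if p.polarity != 0.0 and p.weight > 0]
    if not opinionated:
        return "NONE", 0.0  # no sentiment could be detected
    total_weight = sum(p.weight for p in opinionated)
    score = sum(p.polarity * p.weight for p in opinionated) / total_weight
    if abs(score) < neutral_band:
        return "NEUTRAL", score  # opinions present but roughly balanced
    return ("POSITIVE" if score > 0 else "NEGATIVE"), score


review = [
    Phrase("The screen is gorgeous", 0.8),
    Phrase("but the battery dies by noon", -0.6, weight=2.0),
]
label, score = global_polarity(review)
print(label)  # NEGATIVE
```

Weighting lets a main point count for more than an aside: with the "but" clause weighted double, the negative part dominates and the review comes out NEGATIVE overall, even though it contains a strongly positive phrase.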

In addition to the local and global polarity, the API uses advanced natural language processing techniques to detect the polarity associated with both the entities and the concepts of the text. It also allows users to detect the polarity of entities and concepts they define themselves, which makes this tool applicable to any kind of scenario.
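The idea of scoring user-defined entities or concepts can be sketched with a tiny lexicon: each sentence receives a polarity, and every target term mentioned in that sentence inherits the score. The `LEXICON` values, the naive sentence splitter, and the `entity_polarity` helper are hypothetical simplifications; a production API relies on far richer linguistic analysis than word lookup.

```python
import re
from collections import defaultdict

# Hypothetical toy lexicon mapping words to polarity scores.
LEXICON = {"great": 1.0, "love": 1.0, "fast": 0.5,
           "slow": -0.5, "broken": -1.0, "terrible": -1.0}


def sentence_polarity(sentence):
    """Sum the lexicon scores of the words in one sentence."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return sum(LEXICON.get(word, 0.0) for word in words)


def entity_polarity(text, entities):
    """Average polarity of the sentences that mention each user-defined entity."""
    scores = defaultdict(list)
    # Naive split after '.', '!' or '?' followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        lowered = sentence.lower()
        for entity in entities:
            if entity.lower() in lowered:
                scores[entity].append(sentence_polarity(sentence))
    return {entity: sum(vals) / len(vals) for entity, vals in scores.items()}


review = "The camera is great. Delivery was slow and the box arrived broken."
print(entity_polarity(review, ["camera", "delivery"]))
# {'camera': 1.0, 'delivery': -1.5}
```

Because every entity in a sentence inherits the whole sentence's score, a sentence such as "the camera is great but the battery is terrible" would give both terms the same value; telling them apart requires the kind of relationship analysis between phrases described above.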

Features of our Sentiment Analysis API

Thanks to highly granular and detailed polarity extraction, MeaningCloud's Sentiment Analysis API combines features that optimize the accuracy of each application.

Global sentiment

extracts the general opinion expressed in a tweet, post or review.

Sentiment at attribute level

detects a specific sentiment for an object or any of its qualities, analyzing in detail the sentiment of each sentence.

Identification of opinions and facts

distinguishes between the expression of an objective fact or a subjective opinion.

Detection of irony

identifies comments in which the intended meaning is the opposite of what is literally said.

Graduated polarity

distinguishes very positive and very negative opinions, as well as the absence of sentiment.

Agreement and disagreement

identifies opposing opinions and contradictory or ambiguous messages.
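Two of the features above, graduated polarity and agreement detection, can be illustrated on top of numeric polarity scores. In this sketch the label set (P+ for very positive down to N+ for very negative, NEU for neutral, and NONE for no sentiment), the thresholds, and the function names are illustrative assumptions, not taken from the API's documentation.

```python
def graduated_label(score, has_sentiment=True, strong=0.6, weak=0.1):
    """Map a polarity score in [-1, 1] to a graded label (hypothetical thresholds)."""
    if not has_sentiment:
        return "NONE"   # absence of sentiment, distinct from a neutral opinion
    if score >= strong:
        return "P+"     # very positive
    if score >= weak:
        return "P"      # positive
    if score > -weak:
        return "NEU"    # neutral
    if score > -strong:
        return "N"      # negative
    return "N+"         # very negative


def agreement(polarities, tolerance=0.0):
    """'DISAGREEMENT' if opinionated phrases point in opposite directions, else 'AGREEMENT'."""
    # Keep only the sign of phrases whose polarity exceeds the tolerance.
    signs = {1 if p > 0 else -1 for p in polarities if abs(p) > tolerance}
    return "DISAGREEMENT" if len(signs) > 1 else "AGREEMENT"


print(graduated_label(0.9), graduated_label(-0.3))   # P+ N
print(agreement([0.8, 0.4]), agreement([0.8, -0.6]))  # AGREEMENT DISAGREEMENT
```

Keeping NONE separate from NEU matters in practice: a product specification sheet (no opinion at all) and a lukewarm review (an opinion that is neither positive nor negative) should not be counted the same way.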

What level of accuracy can the automatic sentiment analysis provide?

The first question that arises when talking about automatic sentiment analysis is: “How accurate is it?” However, declaring a solution unacceptable simply because its accuracy falls below a fixed percentage is not a good idea. Accuracy and coverage are not independent, and a compromise between them usually has to be made. What constitutes acceptable performance depends on each case. For example, an anti-terrorism application might aim for 100% coverage, tolerating lower accuracy and false positives (which human reviewers would then filter out). Other applications (e.g. brand perception in social media), on the other hand, may accept lower coverage in exchange for higher accuracy.
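The trade-off between accuracy and coverage can be made concrete with a confidence threshold: predictions below it are left unclassified (for example, routed to a human reviewer). The `SAMPLE` data and the `accuracy_and_coverage` helper below are made up for illustration and do not reflect any real system's results.

```python
# Hypothetical labeled sample: (predicted label, true label, model confidence).
SAMPLE = [
    ("P", "P", 0.95),
    ("N", "N", 0.90),
    ("P", "P", 0.85),
    ("N", "P", 0.60),
    ("P", "N", 0.55),
    ("NEU", "NEU", 0.50),
]


def accuracy_and_coverage(predictions, threshold):
    """Return (accuracy, coverage) when only predictions with confidence >= threshold are kept."""
    if not predictions:
        raise ValueError("need at least one prediction")
    accepted = [(pred, gold) for pred, gold, conf in predictions if conf >= threshold]
    coverage = len(accepted) / len(predictions)
    if not accepted:
        return None, coverage  # accuracy is undefined when nothing is classified
    accuracy = sum(pred == gold for pred, gold in accepted) / len(accepted)
    return accuracy, coverage


for threshold in (0.0, 0.8):
    acc, cov = accuracy_and_coverage(SAMPLE, threshold)
    print(f"threshold={threshold}: accuracy={acc:.0%}, coverage={cov:.0%}")
```

With no threshold, every message is classified and 4 of 6 are correct (about 67% accuracy at 100% coverage); raising the threshold to 0.8 keeps only the three most confident predictions, all of them correct (100% accuracy at 50% coverage). An anti-terrorism scenario would sit at the low-threshold end, a brand-perception dashboard nearer the high end.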

Nevertheless, aspects such as volume and latency are just as important as accuracy and coverage, if not more so. If a human team can analyze hundreds of messages with 85% accuracy but a computer can process millions in real time with 75% accuracy, machines are clearly a valid option.
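To put numbers on that comparison, take 500 messages as a stand-in for "hundreds" and 1,000,000 for "millions" (both illustrative figures, not measurements) and count how many messages each option classifies correctly:

```python
def correctly_classified(volume: int, accuracy: float) -> int:
    """Expected number of correctly labeled messages at a given accuracy."""
    return round(volume * accuracy)


# Illustrative volumes standing in for "hundreds" and "millions".
human = correctly_classified(500, 0.85)
machine = correctly_classified(1_000_000, 0.75)
print(human, machine)  # 425 750000
```

Even at lower accuracy, the automated option yields well over a thousand times as many correct labels, which is why throughput and latency weigh so heavily in the decision.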