It’s time to celebrate! We are rolling out an update to our APIs that lets you analyze texts in fifty-seven languages. Our dream of multilingual text analytics based on our deep semantic approach has come true.

Until now, analyzing texts in different languages meant maintaining a separate model for each language. This gives good results, but it is hard and expensive. We can do better.

Zero to near sixty in no time

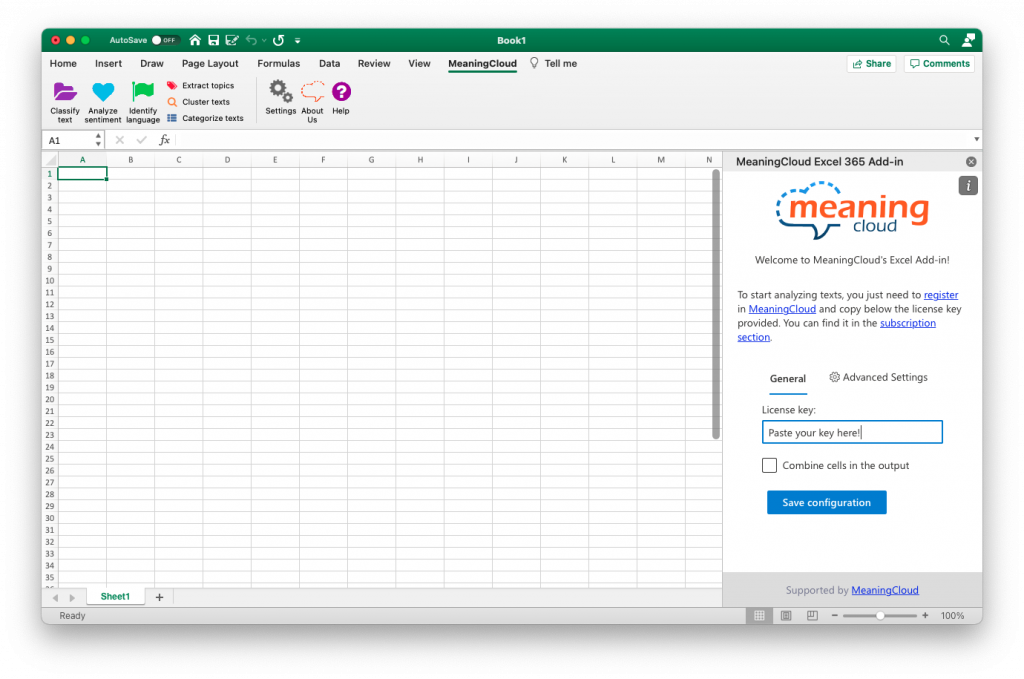

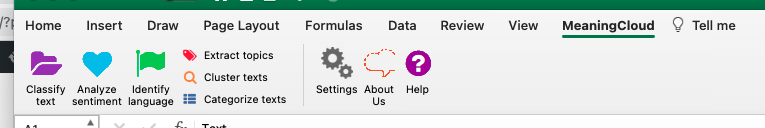

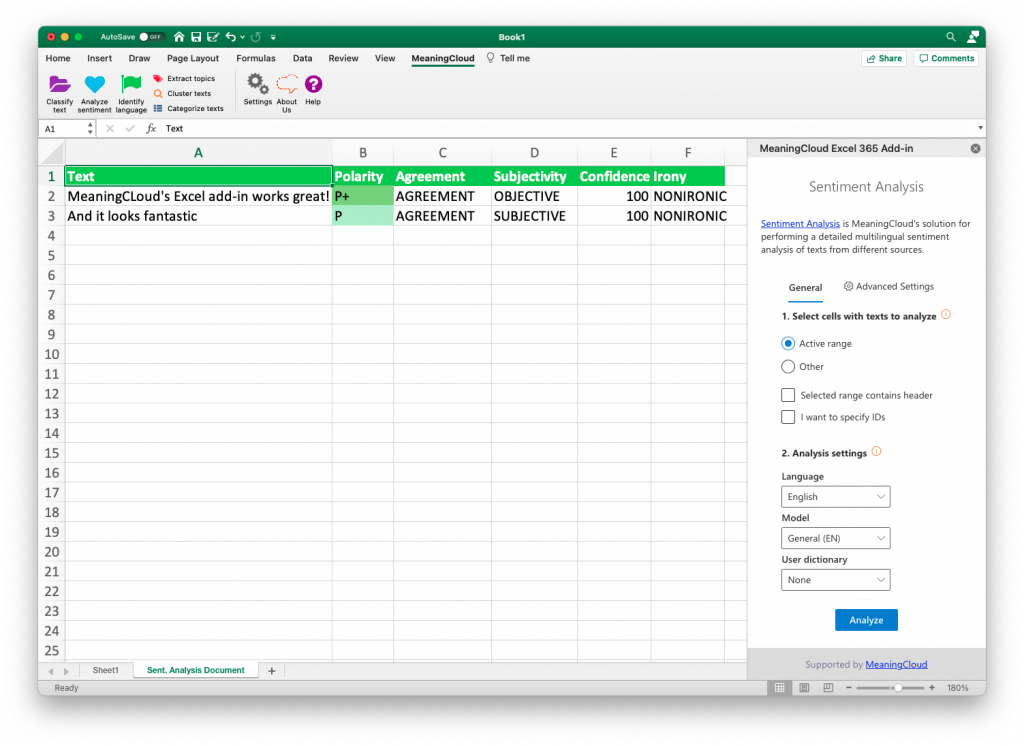

We have integrated our APIs with deep neural network technology that translates all these languages into English. Thanks to this, our users can analyze texts in many languages while maintaining only one model. No action is required: all the new languages will appear in your test console and are available in the APIs.
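To make the idea concrete, here is a minimal sketch of a translate-then-analyze pipeline. It is not our actual implementation: `translate_to_english`, `categorize_english`, and `analyze` are hypothetical names, the translator is a toy lookup table standing in for a neural translation service, and the "model" is a pair of keyword rules. The point is only that every language funnels into the same English model.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a neural machine translation service.
# A toy lookup table keeps the sketch runnable offline.
_TOY_TRANSLATIONS: Dict[str, str] = {
    "das essen war ausgezeichnet": "the food was excellent",
    "der service war schrecklich": "the service was terrible",
}


def translate_to_english(text: str, source_lang: str) -> str:
    """Return an English version of `text` (assumed translator interface)."""
    if source_lang == "en":
        return text  # English input needs no translation
    # Fall back to the original text when the toy table has no entry.
    return _TOY_TRANSLATIONS.get(text.lower(), text)


def categorize_english(text: str) -> List[str]:
    """Hypothetical single English-only model: simple keyword rules."""
    rules = {"food": "Food", "service": "Service"}
    lowered = text.lower()
    return [label for keyword, label in rules.items() if keyword in lowered]


def analyze(
    text: str,
    source_lang: str,
    translate: Callable[[str, str], str] = translate_to_english,
    model: Callable[[str], List[str]] = categorize_english,
) -> List[str]:
    """Translate to English first, then apply the one shared model."""
    english = translate(text, source_lang)
    return model(english)


if __name__ == "__main__":
    print(analyze("Das Essen war ausgezeichnet", "de"))
    print(analyze("The food and service were fine", "en"))
```

Because the model only ever sees English, adding a language in this sketch means extending the translator and leaving the model untouched.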

The complete list of languages includes Chinese, Hindi, Arabic, Russian, Japanese, Turkish, German, and many others that our customers have requested frequently. This adds to our current offering for English, Spanish, French, Italian, Portuguese, and the languages we were covering partially (in some APIs).

One of the most common and extensively studied knowledge extraction tasks is text categorization. Customers frequently ask how we evaluate the quality of the output of our categorization models, especially in scenarios where each document may belong to several categories.